A Terraform Provider Proof-of-Concept

We've been working on a Terraform provider for Nitric to bring Infrastructure from Code to Terraform users. We have a proof-of-concept available here as a public repository, so you can check it out and play around with it. I’ll describe what we’ve built and some of our design choices below, and we welcome your feedback and ideas! You can also watch a video demo of the proof-of-concept.

Official Terraform Providers for AWS and GCP are coming soon, so stay tuned for those as well.

What the Proof-of-Concept Does

Nitric leverages Infrastructure from Code (IfC), which means it creates a resource specification listing all of the resources your application uses and how they relate to each other. Nitric Providers iterate through those resources, collect them and map each one to a Terraform module, or another IaC module, that provisions the infrastructure.
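To make the idea concrete, here is a minimal Python sketch of a resource specification and the mapping step. The `Resource` class, the `MODULES` table and the module paths are hypothetical names chosen for illustration; they are not taken from the Nitric codebase or the PoC repository.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """One entry in a (hypothetical) resource specification."""
    kind: str   # e.g. "secret", "queue", "bucket", "api"
    name: str
    # Which service uses this resource, and with which intended actions.
    used_by: dict[str, list[str]] = field(default_factory=dict)


# Assumed mapping from resource kind to a Terraform module directory.
MODULES = {
    "secret": "./modules/secret",
    "queue": "./modules/queue",
    "bucket": "./modules/bucket",
    "api": "./modules/api",
}


def map_to_modules(spec: list[Resource]) -> list[tuple[Resource, str]]:
    """Pair each supported resource with the module that would provision it."""
    return [(r, MODULES[r.kind]) for r in spec if r.kind in MODULES]


spec = [
    Resource("secret", "api-key", {"hello": ["access"]}),
    Resource("queue", "jobs", {"hello": ["enqueue"]}),
    Resource("schedule", "nightly"),  # no module in this sketch, so it is skipped
]

for resource, module in map_to_modules(spec):
    print(f"{resource.kind}:{resource.name} -> {module}")
```

Skipping kinds that have no module is only one possible policy; a real provider might prefer to fail loudly instead.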

In this Terraform Provider proof-of-concept (PoC), we've built out the scaffolding for the Python code that sets up the deployment and the mapping between the IfC and the IaC. We also have a scaffold of the Terraform modules that actually implement that infrastructure for you.

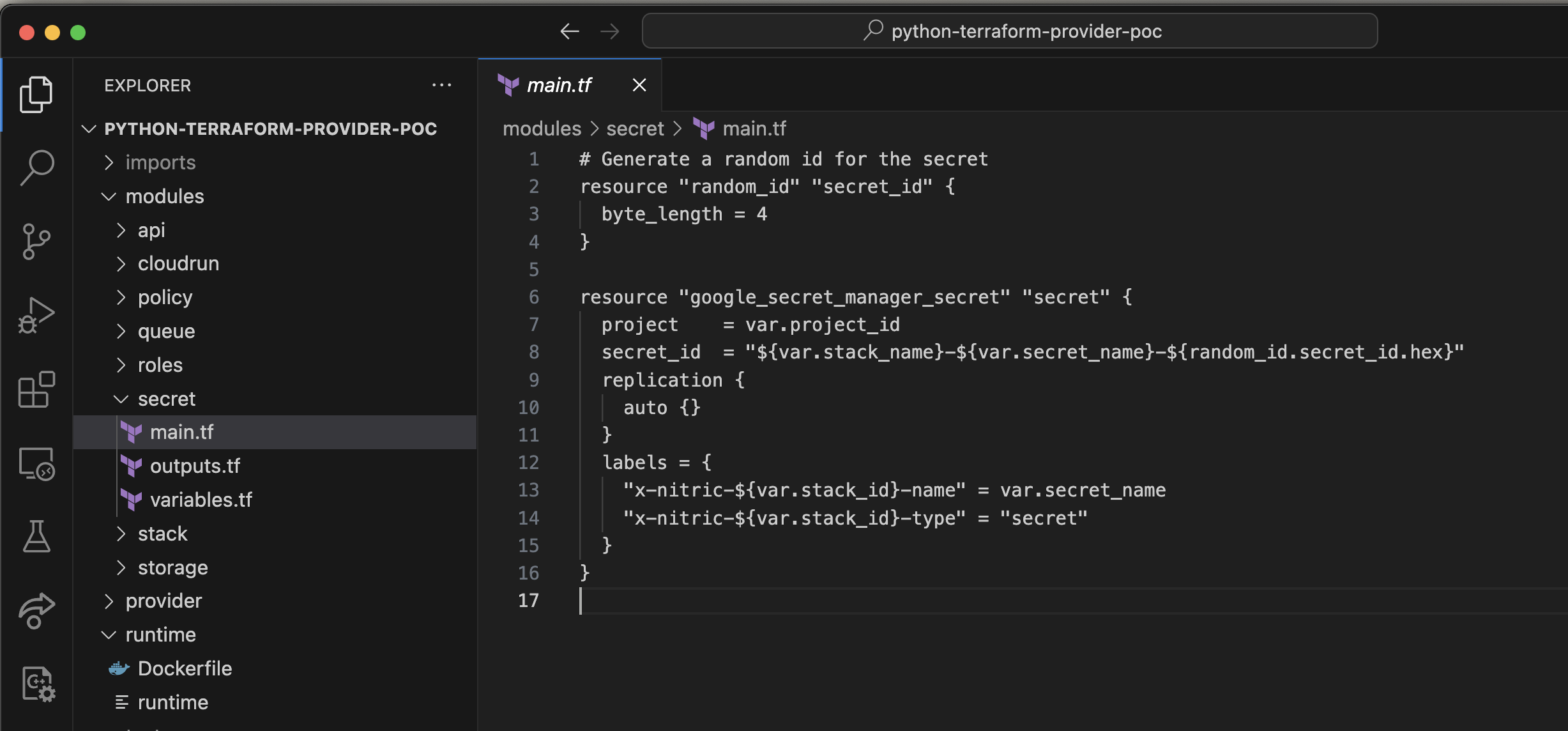

Defining Terraform Modules

Let’s look at the Terraform modules. We’ll take secrets as an example. We’ve got a simple Terraform module that defines a random ID, takes in the project ID and then sets up a replication pattern for the secret with some labels that we're defining.
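As a rough illustration of what such a module contains, the sketch below builds an equivalent definition in Terraform's JSON syntax from Python. It is not the module from the PoC repository: the function name, the label keys and the `replication.auto` block (which assumes a recent version of the Google provider) are assumptions.

```python
import json


def secret_module(secret_name: str, project_id: str, stack_name: str) -> dict:
    """Return a Terraform JSON document describing one Secret Manager secret."""
    return {
        "resource": {
            # A random suffix keeps secret IDs unique across deployments.
            "random_id": {"secret_id": {"byte_length": 4}},
            "google_secret_manager_secret": {
                "secret": {
                    "project": project_id,
                    # "${...}" is a Terraform interpolation, resolved at plan time.
                    "secret_id": f"{secret_name}-${{random_id.secret_id.hex}}",
                    # Assumed replication pattern: let Google manage replicas.
                    "replication": {"auto": {}},
                    # Hypothetical label keys, for illustration only.
                    "labels": {
                        "x-nitric-stack": stack_name,
                        "x-nitric-name": secret_name,
                    },
                }
            },
        }
    }


print(json.dumps(secret_module("api-key", "my-project", "test"), indent=2))
```

Written to a file such as `main.tf.json`, a document shaped like this can be read by Terraform just like an HCL file.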

Mapping Providers to Modules

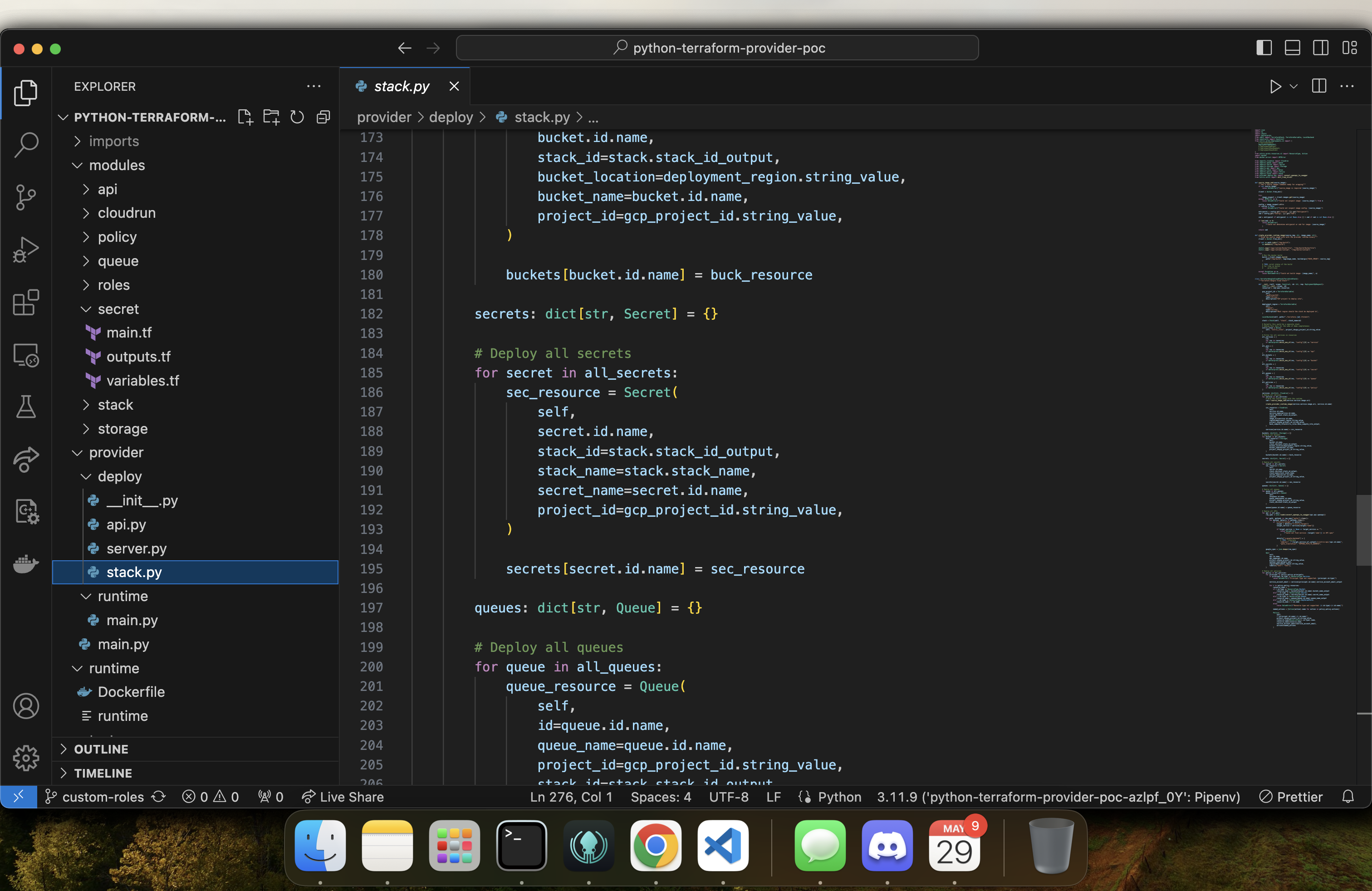

That’s a pretty straightforward Terraform script that deploys a secret. To make this deploy every time someone declares a secret in IfC, we need to orchestrate it, so we've written some Python code. In the provider folder, there's a stack resource, which is responsible for collecting the resources you've declared, for example all of your secrets.
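The orchestration can be pictured roughly as follows. The PoC uses CDKTF for this step; the sketch below substitutes plain dictionaries in Terraform's JSON syntax so that it stays self-contained, and the `Stack` class, its methods and the module variables are hypothetical.

```python
import json


class Stack:
    """Collects declared secrets and emits one module block per secret."""

    def __init__(self, name: str, project_id: str) -> None:
        self.name = name
        self.project_id = project_id
        self.secrets: list[str] = []

    def collect(self, declared: list[tuple[str, str]]) -> None:
        """Keep the names of every declared resource whose kind is 'secret'."""
        self.secrets = [name for kind, name in declared if kind == "secret"]

    def synth(self) -> dict:
        """Build a Terraform JSON document that instantiates the secret module."""
        return {
            "module": {
                f"secret_{name.replace('-', '_')}": {
                    "source": "./modules/secret",  # assumed module location
                    "secret_name": name,
                    "project_id": self.project_id,
                    "stack_name": self.name,
                }
                for name in self.secrets
            }
        }


stack = Stack("test", "my-project")
stack.collect([("secret", "api-key"), ("queue", "jobs"), ("secret", "db-password")])
print(json.dumps(stack.synth(), indent=2))
```

Each declared secret becomes its own module instance, so adding a secret in application code adds a block to the generated output without any hand-written Terraform.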

Demo of Nitric IfC with Terraform

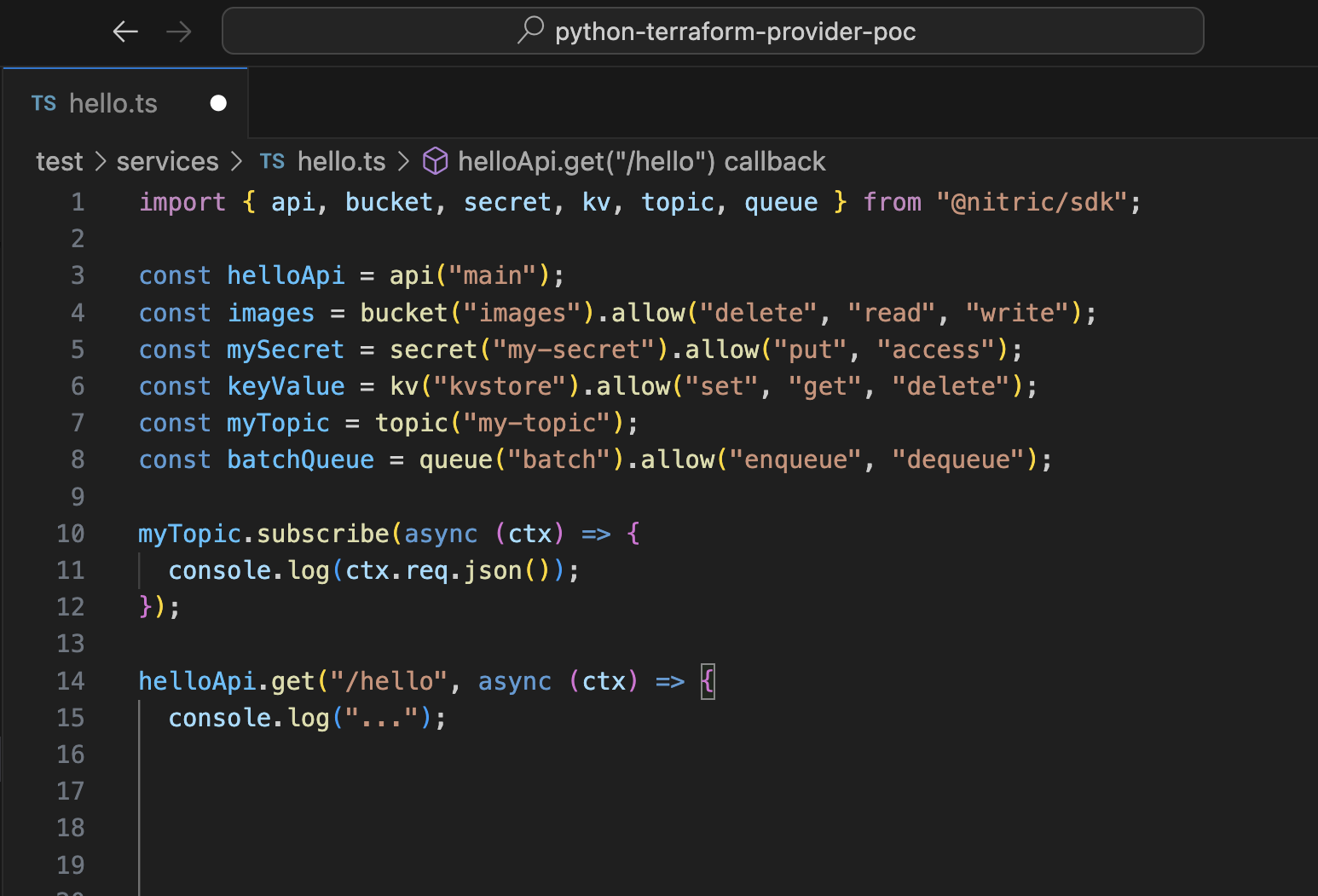

I’ve created a project called test using the standard Nitric CLI. It has a services folder with a hello.ts file in it, but I haven’t really implemented any application code here. I'm just declaring all of the resources and how I intend to use them.

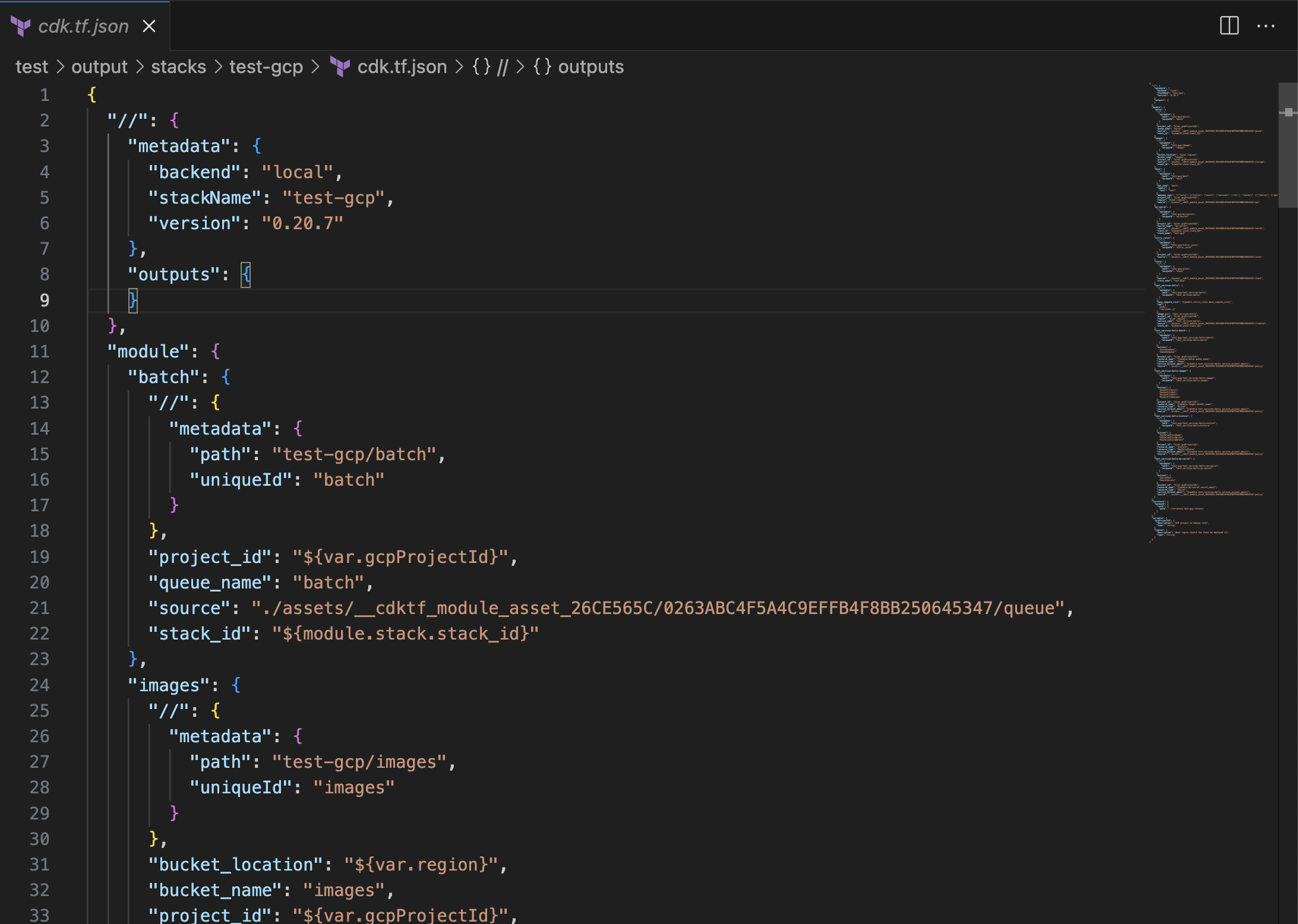

When I run nitric up, I can pick from the standard Nitric Provider, which I've called GCP core, or the Terraform provider. The Terraform provider config tells it to use the Terraform CDK provider, which we've built in this project. So when I run it, it takes the modules that I've defined in this folder and does a scan over my application code, figures out how to provision these resources and builds an output Terraform stack.

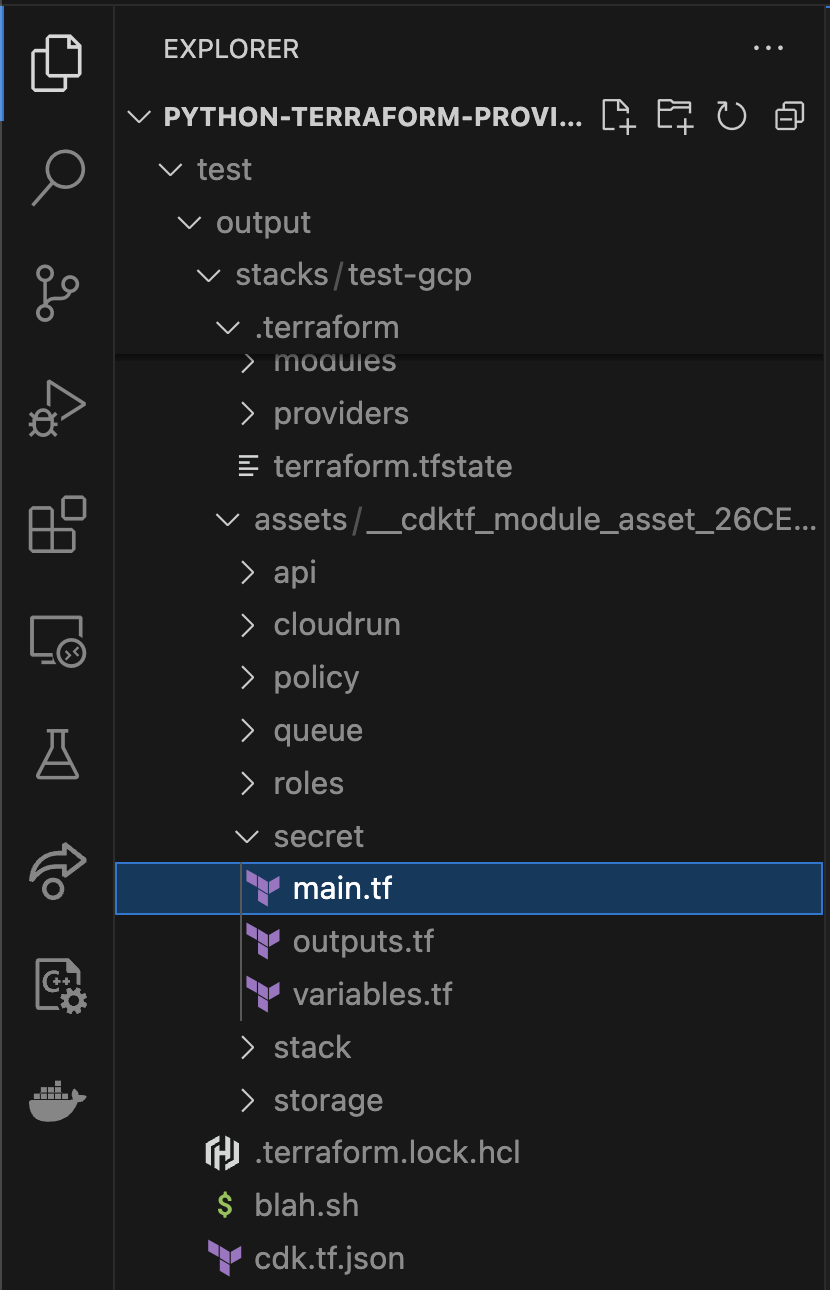

We use CDKTF to help with this process, and we end up with a stack in the output folder. In the GCP folder in that stack, you'll see that I've got an assets folder and it's copied those resources that you've seen in the modules directory.

Deploying

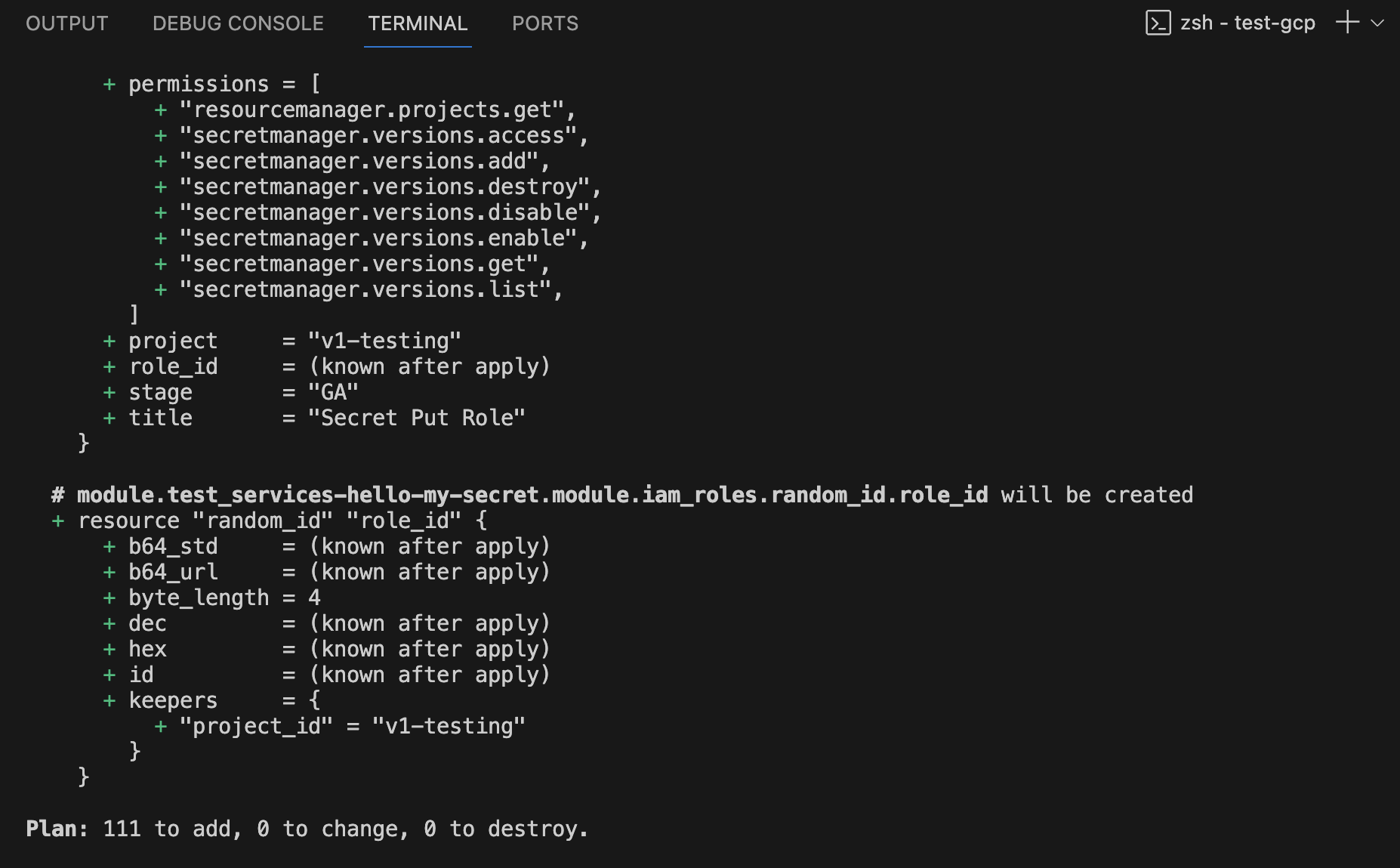

Since this is a Terraform project at this point, I can go into that stack's directory and run terraform init, then terraform plan or terraform apply.

I'll do a plan, and it will show you the differences between my current state and my changed state.
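If you would rather script these steps than type them, a small Python wrapper along these lines would do. The function names are hypothetical, and the stack directory is whatever path the provider generated for your project.

```python
import subprocess
from pathlib import Path


def terraform_commands(apply: bool = False) -> list[list[str]]:
    """Commands to run, in order, inside the generated stack directory."""
    commands = [["terraform", "init"], ["terraform", "plan"]]
    if apply:
        # Without extra flags, apply asks for confirmation before changing anything.
        commands.append(["terraform", "apply"])
    return commands


def run_in_stack(stack_dir: Path, apply: bool = False) -> None:
    """Run each command in stack_dir, stopping at the first failure."""
    for command in terraform_commands(apply):
        subprocess.run(command, cwd=stack_dir, check=True)
```

Calling `run_in_stack(Path("path/to/generated/stack"))` runs init and plan only; pass `apply=True` once you are happy with the plan.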

That summarizes how we've created a Terraform module project and a demo that shows you how to deploy IfC to GCP using Terraform and Nitric.

Note: In this proof-of-concept, not all resources of Nitric are supported. The modules are built out for queues, for secrets, for storage and for the API gateway itself. We haven't done events and schedules yet, so you won't be able to use those resources.

PoC Design Choices

What’s the rationale for using CDKTF instead of SDK v2 or the Terraform plugin framework?

Terraform CDK allows you to output your Terraform scripts, which means that teams who have an existing workflow, where they have static analysis happening against the Terraform, can keep doing what they’re doing today without too much change.

With Nitric’s Pulumi plugins, provisioning is completely automated. Pulumi works that way; it doesn't really produce an output artifact. With Terraform, the way a lot of people use it today is by outputting these scripts. They do version control on the scripts, and they do a lot of static analysis to make sure that their governance requirements are met. We took an approach with this PoC that fits into that flow; you get the Terraform resources baked and exported to use as part of your governance checks and validations. But it’s possible to take other approaches for a Nitric Terraform Provider.

Is this proof-of-concept still extensible to use the Terraform plugin framework or SDK to automate deployment as well?

Nitric Custom Providers are super extensible for any framework. This PoC shows how you can use Python as the orchestration language, Google as the cloud provider, and CDKTF for Terraform IaC. But it's flexible to support any tool or framework you prefer. The approach with this PoC aims to maintain modularity and flexibility, which are core principles in the Nitric framework. We’ve purposely designed it so that if you want to use a different IaC provider in the future, you can easily change that out. Nitric becomes the abstraction layer that separates you from the end state provider. The decoupling between IfC definition, resource specification and the actual provider allows for that flexibility.

The PoC generates Terraform code based on infrastructure rather than fully automating the deployment. Is this fully IfC?

We're still inferring the required infrastructure from the application and building a resource specification on that, and that resource specification is still being implemented by providers as real infrastructure. So that maintains a lot of the IfC experience you get with the Nitric standard providers.

The difference with this PoC is that there’s a manual step to apply the Terraform because we aimed to maintain flexibility. So we left this step to allow you to get your hooks in there and make sure that it works the right way up front. But you can incorporate the deployment step as part of your CI/CD pipeline, so it doesn’t have to be manual if you don’t want it to be.

When you deploy, how do you configure the authentication to Google Cloud?

For this PoC, the required providers configuration in the Terraform pulls credentials from a JSON file, which is just the key for a service account configured with permissions. We're also applying the appropriate permissions for accessing the resources, enabling the required services and setting up the required Firebase database for key value storage. So there are a lot of roles and policies being set up directly in the Terraform as well.
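A configuration of that shape might look like the sketch below, again expressed in Terraform's JSON syntax from Python. The function name, the key file name, the region and the list of services are assumptions for illustration, not values from the PoC.

```python
import json


def google_setup(credentials_file: str, project_id: str, region: str,
                 services: list[str]) -> dict:
    """Provider configuration plus the APIs to enable, as Terraform JSON."""
    return {
        "terraform": {
            "required_providers": {"google": {"source": "hashicorp/google"}}
        },
        "provider": {
            "google": {
                # Terraform's file() function reads the service account key at plan time.
                "credentials": '${file("' + credentials_file + '")}',
                "project": project_id,
                "region": region,
            }
        },
        "resource": {
            # One google_project_service resource per API that must be enabled.
            "google_project_service": {
                service.split(".")[0]: {"project": project_id, "service": service}
                for service in services
            }
        },
    }


config = google_setup(
    "service-account.json",  # hypothetical key file name
    "my-project",
    "us-central1",
    ["secretmanager.googleapis.com"],
)
print(json.dumps(config, indent=2))
```

Keeping the key file path as a parameter makes it easy to point at a different service account per environment.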

Would this PoC work in a cloud-agnostic way?

Nitric Providers are cloud specific, but they can be built for any cloud. This PoC targets GCP, but it would be very easy to create an AWS provider; very little would change. The main changes would need to be in the Terraform modules, which is where the differences apply. The remainder of the provider code would be pretty similar. So by creating additional providers targeting whichever clouds you want, you can deploy in a cloud-agnostic way, simply by choosing the provider when you run nitric up.

How does this work if I have existing Terraform modules?

The resource specification that Nitric creates is basically a list of resources (secrets, queues, Cloud Run, API gateway, etc.), their relationship to each other and a specification of intention, meaning how you're going to use each resource (read, write, delete, etc.). How you interpret that specification is completely within your control. If you’ve got 10 secrets that you need to deploy, it’s completely up to you how you parse that specification and which parts you choose to use or ignore. If you wanted to deploy all the secrets alongside your API instance, you can; it's not a realistic scenario and you probably wouldn't, but you could.
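As one example of interpreting the specification your own way, the sketch below regroups it by service, so that the result could be handed to an existing permissions module. The dictionary layout of `spec` and the function name are hypothetical and do not reflect Nitric's actual specification format.

```python
from collections import defaultdict

# Hypothetical specification: each resource lists who uses it and how.
spec = [
    {"kind": "secret", "name": "api-key", "access": {"hello": ["read"]}},
    {"kind": "queue", "name": "jobs",
     "access": {"hello": ["write"], "worker": ["read", "delete"]}},
]


def grants_by_service(spec, kinds=None):
    """Group intended actions by service, optionally keeping only some kinds."""
    grants = defaultdict(list)
    for resource in spec:
        if kinds is not None and resource["kind"] not in kinds:
            continue  # this interpretation chooses to ignore the resource
        for service, actions in resource["access"].items():
            grants[service].append(
                (resource["kind"], resource["name"], sorted(actions))
            )
    return dict(grants)


print(grants_by_service(spec))
print(grants_by_service(spec, kinds={"secret"}))
```

The `kinds` filter is the "use or ignore" decision in miniature: the same specification yields different infrastructure depending on what the provider chooses to read from it.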

Does the PoC have code validations?

No, there's no validation done in the proof-of-concept. In the v1 Nitric Framework, we have a test suite that tests the resource specification generation. When we publish officially supported Terraform providers, we’ll have a test suite. That test suite will validate the output of both the specification and of the providers. Many people also like to do static analysis of Terraform, which is easy to introduce as part of your CI/CD pipeline or even as part of the provider itself. So the architecture team can also choose one of those options.
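A static check of the generated Terraform does not need to be elaborate. The sketch below assumes the output is available as Terraform JSON and flags secrets that are missing a required label; the function name, the label key and the sample document are hypothetical, and the PoC itself ships no such check.

```python
def unlabeled_secrets(tf: dict, required_label: str) -> list[str]:
    """Names of secret resources that do not carry the required label."""
    secrets = tf.get("resource", {}).get("google_secret_manager_secret", {})
    return sorted(
        name
        for name, body in secrets.items()
        if required_label not in body.get("labels", {})
    )


# Hypothetical generated output with one compliant and one non-compliant secret.
generated = {
    "resource": {
        "google_secret_manager_secret": {
            "api_key": {"secret_id": "api-key", "labels": {"team": "payments"}},
            "db_password": {"secret_id": "db-password"},
        }
    }
}

print(unlabeled_secrets(generated, "team"))
```

A check like this could run in a CI/CD pipeline against the output folder and fail the build when the returned list is not empty.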

This PoC hopefully demonstrates the flexibility of Nitric custom providers. Play around with the PoC here and let us know what you think. If you want to chat or learn about the official Terraform providers in the works, jump into our Discord.